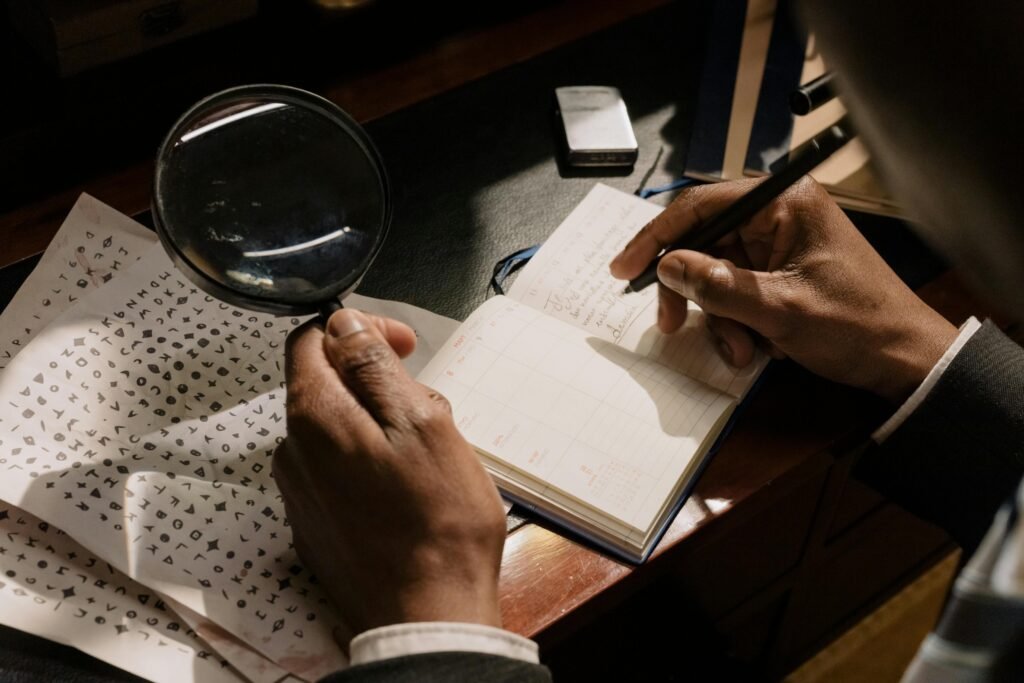

Imagine you’re trying to crack a secret code hidden in a dataset. Maximum likelihood estimation (MLE) is like being a detective searching for the most likely suspect among a group of people. You examine candidate probability distributions (the suspects) and their parameters to find the one under which the observed data (the crime scene) is most probable.

Now, here’s where it gets tricky: sometimes, there are hidden variables that influence the outcome but are not directly observable. This is where the expectation-maximization (EM) algorithm comes in. It’s like having a team of undercover agents help you solve the case.

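The EM algorithm starts from an initial guess for the model’s parameters, uses that guess to estimate the hidden variables, updates the model based on those estimates, and repeats these steps until the estimates stop changing. Each round is guaranteed not to decrease the likelihood, but EM can settle on a local rather than a global maximum, so the starting guess matters.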

To make this concept more relatable, let’s use an example of a group of friends trying to plan a surprise party. They know some friends’ schedules (observed data) but not everyone’s (latent variables). Using the EM algorithm, they make guesses about the missing schedules, adjust their plans, and keep refining them until they have a solid party plan.

Maximum Likelihood Estimation (MLE): Maximum likelihood estimation is a method for estimating the parameters of a statistical model. It involves finding the set of parameter values that maximizes the likelihood function, which measures how probable the observed data are under the model, viewed as a function of those parameters.
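In symbols, and assuming the observations $x_1, \dots, x_n$ are independent draws from a model with parameters $\theta$ (notation introduced here for illustration), the likelihood and the maximum likelihood estimate can be written as:

$$
L(\theta) = \prod_{i=1}^{n} p(x_i \mid \theta), \qquad
\hat{\theta}_{\text{MLE}} = \arg\max_{\theta} L(\theta) = \arg\max_{\theta} \sum_{i=1}^{n} \log p(x_i \mid \theta)
$$

Taking the logarithm turns the product into a sum without moving the maximizer, which is why the log-likelihood is what usually gets maximized in practice.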

Example: Imagine you have a bag of marbles, and you want to estimate the proportion of red marbles in the bag. MLE would choose the proportion that makes the observed data (the marbles you actually draw) most likely. If you draw n marbles with replacement and k of them are red, that estimate turns out to be simply k/n.
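As a quick numerical check of the marble example, the sketch below compares the observed fraction of red marbles with a brute-force search over the log-likelihood. It assumes independent draws with replacement; the counts (7 red out of 20) and the function names are hypothetical, chosen only for illustration.

```python
import math


def log_likelihood(p: float, red: int, total: int) -> float:
    """Log-likelihood of `red` red marbles in `total` independent draws
    if the true proportion of red marbles is p.
    (The binomial coefficient is omitted because it does not depend on p.)"""
    return red * math.log(p) + (total - red) * math.log(1 - p)


def closed_form_mle(red: int, total: int) -> float:
    """Setting the derivative of the log-likelihood to zero gives red / total."""
    return red / total


def grid_search_mle(red: int, total: int, resolution: int = 1000) -> float:
    """Brute-force check: try p = 1/resolution, ..., (resolution - 1)/resolution
    and keep the value with the highest log-likelihood."""
    candidates = [i / resolution for i in range(1, resolution)]
    return max(candidates, key=lambda p: log_likelihood(p, red, total))


if __name__ == "__main__":
    red, total = 7, 20  # hypothetical draws: 7 red marbles out of 20
    print("closed form:", closed_form_mle(red, total))
    print("grid search:", grid_search_mle(red, total))
```

Both approaches should land on the same proportion; the grid search is only there to show that "maximize the likelihood" and "take the observed fraction" agree for this model.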

Expectation-Maximization Algorithm (EM): The expectation-maximization algorithm is an iterative method for finding maximum likelihood estimates in the presence of latent variables. It alternates between two steps: an expectation (E) step, which uses the current parameter estimates to compute the posterior distribution of the latent variables (and, from it, the expected complete-data log-likelihood), and a maximization (M) step, which updates the model parameters to maximize that expected log-likelihood.
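To make the E and M steps concrete, here is a minimal sketch of EM on the classic two-coin problem, which is an illustration added here rather than an example from this post: each set of 10 tosses was made with one of two coins of unknown bias, and which coin was used is the latent variable. The function names, the toss counts, and the starting values (`theta_a=0.6`, `theta_b=0.5`, `pi=0.5`) are hypothetical. The E step computes, for every set, the posterior probability that coin A produced it; the M step re-estimates each coin’s bias as a responsibility-weighted fraction of heads, and the mixing weight `pi` as the average responsibility. The function also records the observed-data log-likelihood after each iteration so you can check that it never decreases.

```python
import math


def binom_logpmf(heads: int, n: int, p: float) -> float:
    """log P(heads | n tosses, bias p) for a binomial distribution."""
    log_choose = math.lgamma(n + 1) - math.lgamma(heads + 1) - math.lgamma(n - heads + 1)
    return log_choose + heads * math.log(p) + (n - heads) * math.log(1 - p)


def log_joint(heads: int, n: int, pi: float, theta_a: float, theta_b: float):
    """Log of P(coin, heads) for coin A and for coin B in the hypothetical model."""
    la = math.log(pi) + binom_logpmf(heads, n, theta_a)
    lb = math.log(1 - pi) + binom_logpmf(heads, n, theta_b)
    return la, lb


def log_sum_exp(a: float, b: float) -> float:
    """Numerically stable log(exp(a) + exp(b))."""
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))


def observed_log_likelihood(data, n, pi, theta_a, theta_b) -> float:
    """Log-likelihood of the toss counts with the latent coin identity summed out."""
    return sum(log_sum_exp(*log_joint(h, n, pi, theta_a, theta_b)) for h in data)


def em_two_coins(data, n, theta_a=0.6, theta_b=0.5, pi=0.5, iters=50):
    """Run EM; return final parameters and the log-likelihood history."""
    history = [observed_log_likelihood(data, n, pi, theta_a, theta_b)]
    for _ in range(iters):
        # E step: responsibility of coin A for each set of tosses.
        resp = []
        for h in data:
            la, lb = log_joint(h, n, pi, theta_a, theta_b)
            resp.append(math.exp(la - log_sum_exp(la, lb)))
        # M step: responsibility-weighted maximum likelihood updates.
        weight_a = sum(resp)
        weight_b = len(data) - weight_a
        theta_a = sum(r * h for r, h in zip(resp, data)) / (n * weight_a)
        theta_b = sum((1 - r) * h for r, h in zip(resp, data)) / (n * weight_b)
        pi = weight_a / len(data)
        history.append(observed_log_likelihood(data, n, pi, theta_a, theta_b))
    return theta_a, theta_b, pi, history


if __name__ == "__main__":
    # Hypothetical data: number of heads in five sets of 10 tosses each.
    heads = [5, 9, 8, 4, 7]
    theta_a, theta_b, pi, history = em_two_coins(heads, n=10)
    print(f"theta_A={theta_a:.3f}  theta_B={theta_b:.3f}  P(coin A)={pi:.3f}")
    print(f"log-likelihood: {history[0]:.4f} -> {history[-1]:.4f}")
```

Working in log space with a max shift (log-sum-exp) keeps the E step stable when the binomial probabilities become very small. Because EM only guarantees a local maximum, rerunning from a few different starting values is a common safeguard.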

Example: Suppose you have a classroom of students whose abilities are unknown (latent variables), and you want to estimate the difficulty of exam questions from their answers. The EM algorithm would alternate between estimating the students’ abilities given the current question difficulties (E step) and re-estimating the difficulties given those ability estimates (M step) until the estimates converge.

We will discuss the mathematics behind EM in another post. Stay tuned.